Core Setup

In this chapter, we will install the first master node: batman (10.0.0.1).

A repository server will be set up directly on this node, so that the rest of the core servers and the other nodes can be deployed from it.

Then we will add the following servers:

- A dhcp server (to provide compute and login nodes with ip addresses and hostnames; dhcp is also part of the PXE boot process)

- A dns server (to provide hostname/ip resolution on the network)

- A pxe server (to deploy automatically the OS on other nodes)

- A ntp server (to synchronize the clocks of all systems)

- A ldap server (to manage users)

- A slurm server (the job scheduler)

Note: if you are using a VM to simulate this configuration, set RAM to 1 GB minimum to get the GUI and be able to choose packages during install.

Installing Centos 7

Core

First, install batman.

You can follow this link for help: http://www.tecmint.com/centos-7-installation/

We will use the following configuration:

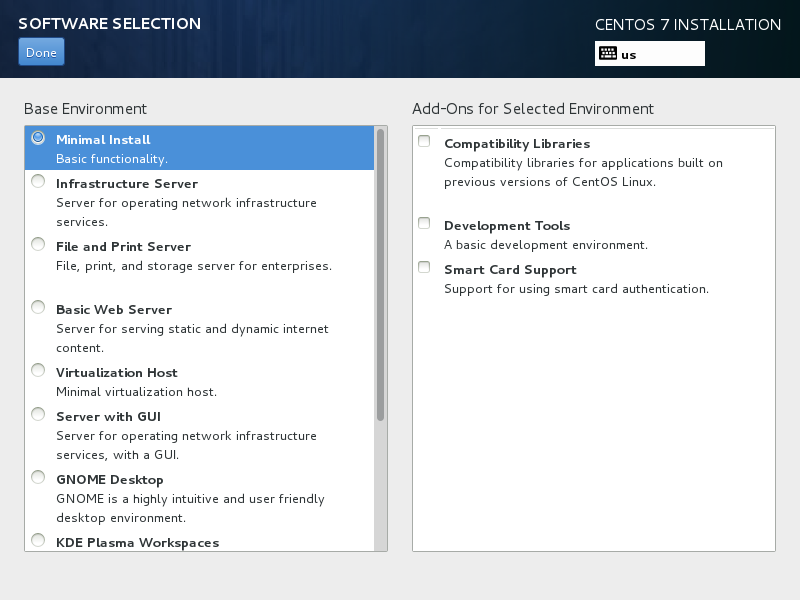

- Minimal installation (not minimal desktop), the bare minimum, no other group of packages (see the screenshot above).

- Automatic partitioning using standard partitions (you can tune as you wish, and/or use lvm). Consider using 4 GB minimum for the /boot partition. A good partitioning would be:

- 4 GB for /boot, in ext4 (allows the use of multiple kernels: can save your life)

- 4 GB for swap

- 30% of remaining space for /var/log, in ext4 (to protect the system if logs grow large)

- 70% of remaining space for /, in ext4

- Only root user, no additional user at install.
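As a worked example of the split suggested above, the following sketch computes partition sizes for a given disk size. The function name partition_plan and the 100 GB disk are assumptions chosen for illustration; sizes are in GB and rounded down by integer arithmetic.

```shell
# Hypothetical helper: print a partition plan (sizes in GB) for a disk
# of the given size, following the split suggested above.
partition_plan() {
    disk=$1
    boot=4
    swap=4
    remaining=$((disk - boot - swap))
    var_log=$((remaining * 30 / 100))   # 30% of the remaining space
    root=$((remaining - var_log))       # the rest (about 70%) goes to /
    echo "/boot $boot"
    echo "swap $swap"
    echo "/var/log $var_log"
    echo "/ $root"
}

# Example with an assumed 100 GB disk.
partition_plan 100
```

For a 100 GB disk, this gives 27 GB for /var/log and 65 GB for /.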

When finished, after reboot, we first disable NetworkManager. NetworkManager is very useful for specific interfaces like Wifi or other, but we will use only basic network configuration, and NetworkManager may overwrite some of our configuration. It is better to deactivate it.

systemctl disable NetworkManager
systemctl stop NetworkManager

Now, you should change the root shell prompt to red. This protects the system from you: when you see red, you should be more careful (red = root = danger!). To get a bold red prompt, add the following at the end of your bashrc file, located at /root/.bashrc:

PS1="\[\e[01;31m\]\h:\w#\[\e[00;m\] "

This will be taken into account at next login.

The first thing now is to be able to install packages. In order to do that, we will set up the repository server on batman. But first, for convenience, you will need to reach the node by ssh from the admin network. We will first set up a static ip for both batman interfaces, then disable and stop the firewall for the time being, and then install the repository server.

Static ip

First check the names of your interfaces. In this tutorial, they will be enp0s3 for the interface on the global network, and enp0s8 for the interface on the admin/HA network. However, on your system, they might be called enp0s3 and enp0s5, or other names like em0, em1, eth0, etc. You can check your current interfaces with the ip addr command. Get the names of your interfaces, and replace enp0s3 and enp0s8 here by their names.

Go to /etc/sysconfig/network-scripts, and edit ifcfg-enp0s8 to the following:

DEVICE=enp0s8
NAME="enp0s8"
TYPE="Ethernet"
NM_CONTROLLED=no
ONBOOT="yes"
BOOTPROTO="static"
IPADDR="172.16.0.1"
NETMASK=255.255.255.0

Then ifcfg-enp0s3 as following:

DEVICE=enp0s3
NAME="enp0s3"
TYPE="Ethernet"
NM_CONTROLLED=no
ONBOOT="yes"
BOOTPROTO="static"
IPADDR="10.0.0.1"
NETMASK=255.255.0.0

Now restart network to activate the interface:

systemctl restart network

Note: if it fails, reboot node or use ifup/ifdown commands.

If you want to back up ifcfg files, don’t do it in the /etc/sysconfig/network-scripts directory, as all files there are read and used by the operating system, and you may end up with strange behavior. Prefer keeping a copy in a dedicated directory, for example /root/my_old_ifcfg_files
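If you have several interfaces to configure, the two files above can be generated rather than typed by hand. The sketch below is only an illustration: make_ifcfg is a hypothetical helper name, and it prints the file content to standard output so that you can review it before writing anything.

```shell
# Hypothetical helper: print an ifcfg file for a static interface.
# Usage: make_ifcfg DEVICE IPADDR NETMASK
make_ifcfg() {
    cat <<EOF
DEVICE=$1
NAME="$1"
TYPE="Ethernet"
NM_CONTROLLED=no
ONBOOT="yes"
BOOTPROTO="static"
IPADDR="$2"
NETMASK=$3
EOF
}

# Preview the two files used in this tutorial.
make_ifcfg enp0s8 172.16.0.1 255.255.255.0
make_ifcfg enp0s3 10.0.0.1 255.255.0.0
```

Once the output looks right, redirect it to the matching file, for example /etc/sysconfig/network-scripts/ifcfg-enp0s8.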

You should now be able to ssh to batman from the admin network using for example the ip 172.16.0.3 for your own desktop/laptop computer.

To finish, manually set the hostname of this node (it will be used at next reboot):

hostnamectl status
hostnamectl set-hostname batman.sphen.local
hostnamectl status

And disable the firewall (mandatory); we will reactivate it later (optional). Note that depending on your Centos/RHEL installation, firewalld may already be deactivated.

systemctl disable firewalld.service
systemctl stop firewalld.service

Repositories server

First server to setup now is repository server. We will use Packages and database inside Centos Everything DVD. Upload the Centos DVD Everything iso on node in /root. Then, we will mount the iso, copy packages and database, and set repository files to let the system know where to look:

mkdir /mnt
mount -o loop -t iso9660 /root/CentOS-7-x86_64-Everything-1511.iso /mnt
mkdir -p /var/www/html/repo/os_base.local.repo
cp -ar /mnt/Packages /var/www/html/repo/os_base.local.repo
cp -ar /mnt/repodata /var/www/html/repo/os_base.local.repo
restorecon -R /var/www/html/repo

The restorecon command automatically sets the SELinux flags on files, so that we can leave SELinux enabled. We will use it a lot.

Now, let’s tell the node where the repository is. Create a new file /etc/yum.repos.d/os_base.local.repo and copy/paste the following:

[os_base.local]
name=os_base.local.repo
baseurl=file:///var/www/html/repo/os_base.local.repo
gpgcheck=0
enabled=1

Note: “file” refers to a local position, not using any server. We need to do it this way for now because we have not installed the http server that will provide the repository on the network, and to install the http server, we need the repository. The only solution is to use a local position for the time being.

Save, and set file rights:

chown root:root /etc/yum.repos.d/os_base.local.repo
chmod 0640 /etc/yum.repos.d/os_base.local.repo

We will remove the original CentOS repository files, but for safety, let's back them up, then refresh yum:

mkdir /etc/yum.repos.d.old/
mv /etc/yum.repos.d/CentOS-* /etc/yum.repos.d.old
yum clean all
yum repolist

Now to finish, let's install and start the http server, to allow other nodes to use this repository using http. We also unmount the iso.

yum install httpd
systemctl enable httpd
systemctl start httpd
umount /mnt

The repository server is up, and listening. We will modify the /etc/yum.repos.d/os_base.local.repo file to replace baseurl=file by the http version, so that batman will access repository using the http server. This will be useful later because it will allow us to simply copy this file on other nodes, without any modifications. Edit /etc/yum.repos.d/os_base.local.repo and replace the baseurl line by:

baseurl=http://10.0.0.1/repo/os_base.local.repo

You can test it works using:

yum clean all
yum repolist

To finish, let's create our own repository, to store our home made rpm (phpldapadmin, nagios, slurm, munge, etc).

mkdir /var/www/html/repo/own.local.repo

Install createrepo to generate repository database (we can install rpm now that we made the main repository available):

yum install createrepo deltarpm

Copy homemade and downloaded rpm (phpldapadmin, slurm, nagios, munge, etc) into /var/www/html/repo/own.local.repo, and generate the database using:

createrepo -v --update /var/www/html/repo/own.local.repo

Note: you can add other rpm in the same directory in the future, and use the same command to update the database. This is why we added --update.

Then create the file /etc/yum.repos.d/own.local.repo and copy/paste the following:

[own.local]
name=own.local.repo
baseurl=http://10.0.0.1/repo/own.local.repo
gpgcheck=0
enabled=1

Save, and set file rights:

chown root:root /etc/yum.repos.d/own.local.repo
chmod 0640 /etc/yum.repos.d/own.local.repo

The repositories are ready.

Dhcp server

The dhcp server is used to assign ip addresses and hostnames to compute nodes and login nodes. It is also the first server seen by a new node booting in pxe (on the network) for installation. It will indicate to this node where the pxe server is, and where the dns server is. In this configuration, we assume you know the MAC addresses of your nodes (they should be provided by the manufacturer; if not, see the login node installation part of the tutorial on how to obtain them manually). Now that we have set up the repository server, things should be easier.

Install the dhcp server package:

yum install dhcp

Do not start it now, let's configure it first. The configuration file is /etc/dhcp/dhcpd.conf. Edit this file, and copy/paste the following, adjusting it to your needs:

#

# DHCP Node Configuration file.

# see /usr/share/doc/dhcp*/dhcpd.conf.example

# see dhcpd.conf(5) man page

#

authoritative;

subnet 10.0.0.0 netmask 255.255.0.0 {

range 10.0.254.0 10.0.254.254; # Where unknown hosts go

option domain-name "sphen.local";

option domain-name-servers 10.0.0.1; # dns server ip

option broadcast-address 10.0.255.255;

default-lease-time 600;

max-lease-time 7200;

next-server 10.0.0.1; # pxe server ip

filename "pxelinux.0";

# List of computes/logins nodes

host login1 {

hardware ethernet 08:00:27:18:68:BC;

fixed-address 10.0.2.1;

option host-name "login1";

}

host login2 {

hardware ethernet 08:00:27:18:68:CC;

fixed-address 10.0.2.2;

option host-name "login2";

}

host compute1 {

hardware ethernet 08:00:27:18:68:EC;

fixed-address 10.0.3.1;

option host-name "compute1";

}

host compute2 {

hardware ethernet 08:00:27:18:68:1C;

fixed-address 10.0.3.2;

option host-name "compute2";

}

host compute3 {

hardware ethernet 08:00:27:18:68:2C;

fixed-address 10.0.3.3;

option host-name "compute3";

}

host compute4 {

hardware ethernet 08:00:27:18:68:3C;

fixed-address 10.0.3.4;

option host-name "compute4";

}

}

Note the range parameter. With this configuration, any new host asking for an ip whose MAC isn’t known by the dhcp server will be given a random address in this range. It will help later to identify nodes we want to install using PXE but whose ip we don’t want to be configured through dhcp, like the nfs node.

You can refer to online documentation for further explanations. The important parts here are the server ip addresses, the ranges covered by the dhcp, and the list of host MAC addresses covered by the DHCP server with their assigned ip and hostnames. When booting in pxe mode, a node will get the ip of all the servers.
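With many nodes, typing every host block by hand becomes error-prone. As a minimal sketch, the host entries can be generated from a list of "name mac ip" triples. The helper name dhcp_host is hypothetical, and the two sample lines are simply taken from the configuration above.

```shell
# Hypothetical helper: print one dhcpd.conf host block.
# Usage: dhcp_host NAME MAC IP
dhcp_host() {
    printf 'host %s {\n' "$1"
    printf '  hardware ethernet %s;\n' "$2"
    printf '  fixed-address %s;\n' "$3"
    printf '  option host-name "%s";\n' "$1"
    printf '}\n'
}

# One "name mac ip" triple per line; these two come from the file above.
while read -r name mac ip; do
    dhcp_host "$name" "$mac" "$ip"
done <<EOF
compute1 08:00:27:18:68:EC 10.0.3.1
compute2 08:00:27:18:68:1C 10.0.3.2
EOF
```

The output is meant to be pasted inside the subnet block. Before starting the service, dhcpd -t -cf /etc/dhcp/dhcpd.conf can be used to check the syntax of the resulting file.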

Now, you can start and enable the dhcp server:

systemctl enable dhcpd
systemctl start dhcpd

Your dhcp server is ready.

Dns server

DNS server provides on the network:

- ip for corresponding hostname

- hostname for corresponding ip

DNS is important as it greatly simplifies system configuration and provides flexibility: by using hostnames instead of static ip addresses, you can change the ip of a node (for maintenance purposes, for example) and just adjust the dns settings; the other nodes will not see any difference and production can continue. In the first part of this tutorial, we will not use DNS a lot, but we will use it later when increasing flexibility.

Install dns server package:

yum install bind bind-utils

Now the configuration. It includes 3 files: main configuration file, forward file, and reverse file. (you can separate files into more if you wish, not needed in this tutorial).

Main configuration file is /etc/named.conf. Edit it and add the following parameters (listen-on, allow-query, and zones):

//

// named.conf

//

// Provided by Red Hat bind package to configure the ISC BIND named(8) DNS

// server as a caching only nameserver (as a localhost DNS resolver only).

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

options {

listen-on port 53 { 127.0.0.1; 10.0.0.1;};

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

allow-query { localhost; 10.0.0.0/16;};

/*

- If you are building an AUTHORITATIVE DNS server, do NOT enable recursion.

- If you are building a RECURSIVE (caching) DNS server, you need to enable

recursion.

- If your recursive DNS server has a public IP address, you MUST enable access

control to limit queries to your legitimate users. Failing to do so will

cause your server to become part of large scale DNS amplification

attacks. Implementing BCP38 within your network would greatly

reduce such attack surface

*/

recursion no;

dnssec-enable yes;

dnssec-validation yes;

dnssec-lookaside auto;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.iscdlv.key";

managed-keys-directory "/var/named/dynamic";

pid-file "/run/named/named.pid";

session-keyfile "/run/named/session.key";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

zone "sphen.local" IN {

type master;

file "forward";

allow-update { none; };

};

zone "0.10.in-addr.arpa" IN {

type master;

file "reverse";

allow-update { none; };

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

Recursion is disabled because no internet access is available. The server listens on 10.0.0.1, and allows queries from 10.0.0.0/16. What contains our names and ip are the 2 last zone parts. They refer to the two other files: forward and reverse. These files are located in /var/named/.

Note that the 0.10.in-addr.arpa is related to first part of our range of ip. If cluster was using for example 172.16.x.x ip range, then it would have been 16.172.in-addr.arpa.
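To make the reversal explicit, here is a small sketch that derives the reverse zone name and the PTR record label from an ip address, assuming a /16 network as in this tutorial. The function names reverse_zone and ptr_label are hypothetical.

```shell
# Hypothetical helpers for a /16 network such as 10.0.0.0/16.

# Print the reverse zone name for an IPv4 address.
reverse_zone() {
    old_ifs=$IFS; IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo "$2.$1.in-addr.arpa"
}

# Print the record name to put in front of "IN PTR" in the reverse file.
ptr_label() {
    old_ifs=$IFS; IFS=.
    set -- $1
    IFS=$old_ifs
    echo "$4.$3"
}

reverse_zone 10.0.3.1    # 0.10.in-addr.arpa
ptr_label 10.0.3.1       # 1.3
reverse_zone 172.16.0.1  # 16.172.in-addr.arpa
```

The first two octets, reversed, name the zone; the last two octets, reversed, name the record inside that zone.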

First file is /var/named/forward. Create it and add the following:

$TTL 86400

@ IN SOA batman.sphen.local. root.sphen.local. (

2011071001 ;Serial

3600 ;Refresh

1800 ;Retry

604800 ;Expire

86400 ;Minimum TTL

)

@ IN NS batman.sphen.local.

@ IN A 10.0.0.1

batman IN A 10.0.0.1

login1 IN A 10.0.2.1

login2 IN A 10.0.2.2

compute1 IN A 10.0.3.1

compute2 IN A 10.0.3.2

compute3 IN A 10.0.3.3

compute4 IN A 10.0.3.4

nfs1 IN A 10.0.1.1

Second one is /var/named/reverse. Create it and add the following:

$TTL 86400

@ IN SOA batman.sphen.local. root.sphen.local. (

2011071001 ;Serial

3600 ;Refresh

1800 ;Retry

604800 ;Expire

86400 ;Minimum TTL

)

@ IN NS batman.sphen.local.

@ IN PTR sphen.local.

batman IN A 10.0.0.1

1.0 IN PTR batman.sphen.local.

1.2 IN PTR login1.sphen.local.

2.2 IN PTR login2.sphen.local.

1.3 IN PTR compute1.sphen.local.

2.3 IN PTR compute2.sphen.local.

3.3 IN PTR compute3.sphen.local.

4.3 IN PTR compute4.sphen.local.

1.1 IN PTR nfs1.sphen.local.

You can observe the presence of the local domain name, sphen.local, and that all hosts are declared here in forward and reverse order (to allow DNS to provide the ip for a hostname, or the opposite). Important: when using tools like dig or nslookup, you need to use the full domain name of hosts. For example, dig login1.sphen.local. When using ping or ssh or other tools, login1 alone is enough.

Set rights on files:

chgrp named -R /var/named
chown -v root:named /etc/named.conf
restorecon -rv /var/named
restorecon /etc/named.conf

Time now to start server:

systemctl enable named
systemctl start named

Then edit /etc/resolv.conf as following, to tell batman host where to find dns:

search sphen.local
nameserver 10.0.0.1

You can try to ping batman node.

One last thing is to lock the /etc/resolv.conf file. Why? Because depending on your system configuration, some other programs may edit it and break your configuration. We will make this file immutable, using:

chattr +i /etc/resolv.conf

To unlock the file later, simply use:

chattr -i /etc/resolv.conf

DNS is ready.

Pxe server

The purpose of the pxe server is to allow nodes to deploy a linux operating system, without having to install everything manually, one by one, using a DVD or a USB key.

The pxe server hosts the minimal kernel for pxe booting, the kickstart file that tells remote hosts how they should be installed, and the minimal centos 7 iso for minimal packages distribution. You need to tune the kickstart file if you want to design a specific installation (which rpm to install, how partitions should be made, running pre/post scripts, etc).

This section assumes your nodes are able to boot in PXE (which is the case for most professional/industrial equipment). If not, you will need to skip this part and install all nodes manually. Bad luck for you. Note that all hypervisors can handle PXE booting for VM. If you are using Virtualbox, press F12 at boot of the VM, then l, and the VM will boot on PXE (but only on the first interface).

Upload the CentOS-7-x86_64-Minimal-1511.iso (or the RHEL DVD if you are using RHEL) file in /root, we will mount it.

mkdir /mnt
mount -o loop -t iso9660 /root/CentOS-7-x86_64-Minimal-1511.iso /mnt

Install needed servers:

yum install tftp
yum install tftp-server

Note that we are using the tftp server directly, without relying on xinetd. After months of tests, I prefer using tftp directly, especially on Centos 7 and above (xinetd does not work as expected).

Edit the file /usr/lib/systemd/system/tftp.service and enable verbose mode for the tftp server by adding --verbose in it; this will be VERY useful later:

[Unit]
Description=Tftp Server
Requires=tftp.socket
Documentation=man:in.tftpd

[Service]
ExecStart=/usr/sbin/in.tftpd -s /var/lib/tftpboot --verbose
StandardInput=socket

[Install]
Also=tftp.socket

Then reload systemd and start tftp server:

systemctl daemon-reload
systemctl start tftp
systemctl enable tftp

Now, let's install pxe files part:

yum install syslinux httpd

Copy needed files into desired locations:

cp -v /usr/share/syslinux/pxelinux.0 /var/lib/tftpboot
cp -v /usr/share/syslinux/menu.c32 /var/lib/tftpboot
cp -v /usr/share/syslinux/memdisk /var/lib/tftpboot
cp -v /usr/share/syslinux/mboot.c32 /var/lib/tftpboot
cp -v /usr/share/syslinux/chain.c32 /var/lib/tftpboot
mkdir /var/lib/tftpboot/pxelinux.cfg
mkdir /var/lib/tftpboot/netboot/
mkdir -p /var/www/html/iso
cp /mnt/images/pxeboot/vmlinuz /var/lib/tftpboot/netboot/
cp /mnt/images/pxeboot/initrd.img /var/lib/tftpboot/netboot/
restorecon -R /var/lib/tftpboot
cp -Rv /mnt/* /var/www/html/iso
restorecon -R /var/www/html/iso

Generate a root ssh public key to be able to install it as an authorized_key during kickstart process (this will allow to login on nodes using ssh directly after a fresh install).

ssh-keygen -N ""

Just use “Enter” to answer questions, and do not set any pass-phrase. Then get the content of /root/.ssh/id_rsa.pub file, it will be needed for the kickstart file.

Now generate a root password hash for remote nodes (the password you enter here will be the root password of nodes installed by pxe), using:

python -c "import crypt,random,string; print crypt.crypt(raw_input('clear-text password: '), '\$6\$' + ''.join([random.choice(string.ascii_letters + string.digits) for _ in range(16)]))"

Get the hash, it will be needed for the kickstart file also.

Now add the kickstart and pxelinux files. Start with the kickstart file /var/www/html/ks.cfg, using the following:

# version=RHEL7
# System authorization information
auth --enableshadow --passalgo=sha512
# Do not use graphical install
text
# Run the Setup Agent on first boot
firstboot --enable
# Keyboard layouts, set your own
#keyboard --vckeymap=fr --xlayouts='fr'
keyboard --vckeymap=us --xlayouts='us'
# System language
lang en_US.UTF-8
# Network information
network --bootproto=dhcp --device=enp0s3 --ipv6=auto --activate
network --hostname=localhost.localdomain
# Root password, the hash generated before
rootpw --iscrypted $6$rJ2xMRxbzIk6pBjL$fSClcUjfftsd7WLdilG6FVgjtcN1y5g3Dpl0Z2NQVHcNgWNgQmI1xU5L8ullHv59sLsmbRQAGj8KMP1H1Sg3Q.
# System timezone
timezone Europe/Paris --isUtc
# System bootloader configuration
bootloader --append=" crashkernel=auto" --location=mbr --boot-drive=sda
# Partition information, here 1 disk only. grow means “take remaining space”
ignoredisk --only-use=sda
clearpart --all --initlabel --drives=sda
part /boot --fstype=ext4 --size=4096
part / --fstype=ext4 --size=1000 --grow
#part swap --size=4000 # We don’t want to use swap
# Reboot after installation
reboot
%packages
@core
%end
# Replace here the ssh-key by the one in your id_rsa.pub
%post
mkdir /root/.ssh
cat << xxEOFxx >> /root/.ssh/authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDd/D8djP1pi56KQokGb3V2KWU7sEFP4oHNh7MlKPp4ZdkQUa7wfR3xbsDYiEN+UrF9AEHGqUF3DHhJMhj+soXuBysIIimZyDLPn5GoHanQ/FtjKPVpiRpTcxprDVtuhcDOKbl58aSXCsfDM3cahUm0Y0Jk+Dp84NDWc7Ve0SOtCgWchYmgJUExYNBDWFPcZSRs20nQZ2aShFZemqzkKY2cgIR5PYvdwr+A9ZCrjNkmW02K2gk+qRdIYW2sVmMictmY6KrrbtYIiucsSpC805wGk+V4+DkDOJek9a9EH0/6n0CShXVjpXjsbJ9/Y4xA/qIBl7oizEImsZ8rYCT4pkz/ root@batman.sphen.local
xxEOFxx
restorecon -R -v /root/.ssh
%end

A few important comments:

- This file is important and should be setup according to your needs. (For example, I chose to avoid swap on compute and login, so I commented it here.)

- Replace “rootpw --iscrypted $6$rJ2xMRxbzIk6p…” by the root password hash (generated before) that you wish to set on nodes. As a reminder, use the following command to generate it:

python -c "import crypt,random,string; print crypt.crypt(raw_input('clear-text password: '), '\$6\$' + ''.join([random.choice(string.ascii_letters + string.digits) for _ in range(16)]))"

Or also:

python -c 'import crypt; print crypt.crypt("password", "$6$saltsalt$")'

- Replace “ssh-rsa AAAAB3NzaC…” by the public ssh root key of the main node batman (we got the content of the id_rsa.pub file before).

- Replace keyboard keymap by your needs. Here it's us/qwerty.

- Replace the time zone by yours. This point is very important! The time zone set here must be exactly the same as the one set on the master node (batman). If not, nodes will not be on the same time zone, and all security tools will complain about it. If you don't remember which one you chose, use: ll /etc/localtime. This command shows a link target, and that target is your time zone.

- Adjust partitions to your needs. If you don't know what the disk label of the nodes will be, replace by:

# System bootloader configuration
bootloader --append=" crashkernel=auto" --location=mbr
# Partition information, here 1 disk only. grow means “take remaining space”
clearpart --all --initlabel
part /boot --fstype=ext4 --size=4096
part / --fstype=ext4 --size=1000 --grow
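Regarding the time zone comment above, the name to put after the timezone keyword can be read from the target of the /etc/localtime link on batman. The sketch below assumes that /etc/localtime is a symbolic link into a zoneinfo directory (which is the case when the time zone was chosen at install); tz_from_link is a hypothetical helper name.

```shell
# Hypothetical helper: extract the time zone name from the target of
# the /etc/localtime symbolic link (everything after "zoneinfo/").
tz_from_link() {
    echo "${1#*zoneinfo/}"
}

# On the master node, readlink prints the link target.
# Nothing is printed if /etc/localtime is not a symbolic link.
if [ -L /etc/localtime ]; then
    tz_from_link "$(readlink /etc/localtime)"
fi
```

For a link pointing to ../usr/share/zoneinfo/Europe/Paris, the helper prints Europe/Paris, which is the value used in the kickstart file of this tutorial.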

Now set rights to this file:

chmod 0644 /var/www/html/ks.cfg
restorecon /var/www/html/ks.cfg

Now let's work on the pxelinux file. This file tells remote node where needed files (iso, etc) can be downloaded. Edit /var/lib/tftpboot/pxelinux.cfg/default as following:

default menu.c32
prompt 0
timeout 60
MENU TITLE sphen PXE

# default centos7_x64
default localdisk

LABEL centos7_x64
MENU LABEL CentOS 7 X64
KERNEL /netboot/vmlinuz
APPEND initrd=/netboot/initrd.img inst.repo=http://10.0.0.1/iso ks=http://10.0.0.1/ks.cfg console=tty0 console=ttyS1,115200

LABEL localdisk
KERNEL chain.c32
APPEND hd0

We added console=tty0 console=ttyS1,115200 after APPEND to get ipmi console view. But for some servers, you may need to replace ttyS1 by ttyS0 or ttyS2 to view all OS installation process.

How this file works will be described later, in the compute/login nodes installation part.

Set rights:

chmod 0644 /var/lib/tftpboot/pxelinux.cfg/default
restorecon /var/lib/tftpboot/pxelinux.cfg/default

Adjust rights on tftpboot:

chmod -R 755 /var/lib/tftpboot

Now start servers:

systemctl restart httpd
systemctl enable httpd

The PXE stack is ready, but not activated. We will activate it on demand later.

Debug

If you get troubles with tftp server, you can try to download a file from it to see if it works at least locally, using (use “quit” to exit):

tftp localhost
get pxelinux.0

Check /var/log/messages: with -v, tftp server is very verbose. Check also if tftp server is listening, using (if nothing appears, then it's off):

netstat -unlp | grep tftp
netstat -a | grep tftp

Also, with some configurations, I have seen 755 rights give a Permission denied. Replace them with 777 if really needed (not secure).

Resources: https://www.unixmen.com/install-pxe-node-centos-7/

Ntp server

The ntp server provides date and time to ensure all nodes/servers are synchronized. This is VERY important, as many authentication tools will not work if the cluster is not clock synchronized.

Install needed packages:

yum install ntp

We will allow the local network to query time from this server. Edit /etc/ntp.conf and add a restrict line for the 10.0.0.0/16 network. Also, to make the server trust its own clock if no other ntp server is reachable on site, comment out the public pool servers and add a fake server on localhost, asking ntp to use its own clock on 127.127.1.0. Stratum is set high because we can consider it to be a poor quality time source. The part of the file to be edited should look like this (note that we commented the centos servers using #):

# Permit all access over the loopback interface. This could
# be tightened as well, but to do so would effect some of
# the administrative functions.
restrict 127.0.0.1
restrict ::1

# Hosts on local network are less restricted.
restrict 10.0.0.0 mask 255.255.0.0 nomodify notrap

# Use public servers from the pool.ntp.org project.
# Please consider joining the pool (http://www.pool.ntp.org/join.html).
#server 0.centos.pool.ntp.org iburst
#server 1.centos.pool.ntp.org iburst
#server 2.centos.pool.ntp.org iburst
#server 3.centos.pool.ntp.org iburst
server 127.127.1.0
fudge 127.127.1.0 stratum 14

Then start and enable server:

systemctl start ntpd
systemctl enable ntpd

NTP is now ready.

Ldap server

Core

The LDAP server provides centralized user authentication on the cluster. We will set up a basic LDAP server, and (optional) install phpldapadmin, a web interface used to manage the LDAP database. We will also activate SSL to protect authentication on the network.

Install packages:

yum install openldap-servers openldap-clients

Then prepare ldap server:

cp /usr/share/openldap-servers/DB_CONFIG.example /var/lib/ldap/DB_CONFIG
chown ldap. /var/lib/ldap/DB_CONFIG
systemctl start slapd
systemctl status slapd

Then, generate a (strong) password hash using slappasswd (this password will be the one of the Manager of the ldap database), and keep it for next step:

slappasswd

Then, in /root, create the chdomain.ldif file, in which we will define a new admin (root) user for the LDAP database, called Manager, and provide its password to slapd. Use the hash obtained at the previous step to replace the one here (be careful, ldap does not accept any changes in tabulation, spaces, or new lines):

dn: olcDatabase={1}monitor,cn=config
changetype: modify
replace: olcAccess
olcAccess: {0}to * by dn.base="gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth"
  read by dn.base="cn=Manager,dc=sphen,dc=local" read by * none

dn: olcDatabase={2}hdb,cn=config
changetype: modify
replace: olcSuffix
olcSuffix: dc=sphen,dc=local

dn: olcDatabase={2}hdb,cn=config
changetype: modify
replace: olcRootDN
olcRootDN: cn=Manager,dc=sphen,dc=local

dn: olcDatabase={2}hdb,cn=config
changetype: modify
add: olcRootPW
olcRootPW: {SSHA}+u5uxoAJlGZd4e5m5zfezefbzebnebzgfz

dn: olcDatabase={2}hdb,cn=config
changetype: modify
add: olcAccess
olcAccess: {0}to attrs=userPassword,shadowLastChange by
  dn="cn=Manager,dc=sphen,dc=local" write by anonymous auth by self write by * none
olcAccess: {1}to dn.base="" by * read
olcAccess: {2}to * by dn="cn=Manager,dc=sphen,dc=local" write by * read

Then, create the basedomain.ldif file, in which we will define the structure of our base (People for users, and Group for their groups):

dn: dc=sphen,dc=local
objectClass: top
objectClass: dcObject
objectclass: organization
o: sphen local
dc: sphen

dn: cn=Manager,dc=sphen,dc=local
objectClass: organizationalRole
cn: Manager
description: Directory Manager

dn: ou=People,dc=sphen,dc=local
objectClass: organizationalUnit
ou: People

dn: ou=Group,dc=sphen,dc=local
objectClass: organizationalUnit
ou: Group

Now populate database. Last command will need the password used to generate the hash before:

ldapadd -Y EXTERNAL -H ldapi:/// -f /etc/openldap/schema/cosine.ldif
ldapadd -Y EXTERNAL -H ldapi:/// -f /etc/openldap/schema/nis.ldif
ldapadd -Y EXTERNAL -H ldapi:/// -f /etc/openldap/schema/inetorgperson.ldif
ldapmodify -Y EXTERNAL -H ldapi:/// -f chdomain.ldif
ldapadd -x -D cn=Manager,dc=sphen,dc=local -W -f basedomain.ldif

To finish, let’s create a basic user, for testing (managing users will be explained later), by creating a file myuser.ldif. The user will be “myuser”, and we will use the id 1101 for this user. You must use only one id per user, and use an id greater than 1000 (under 1000 is for system users). First, generate a password hash for the new user using:

slappasswd

Then fill the myuser.ldif file:

dn: uid=myuser,ou=People,dc=sphen,dc=local
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
cn: myuser
sn: Linux
userPassword: {SSHA}RXJnfvyeHf+G48tiezfzefzefzefzef
loginShell: /bin/bash
uidNumber: 1101
gidNumber: 1101
homeDirectory: /home/myuser

dn: cn=myuser,ou=Group,dc=sphen,dc=local
objectClass: posixGroup
cn: myuser
gidNumber: 1101
memberUid: myuser

Here we create a user and its own group, with the same name and the same id as the user.

Add it to database:

ldapadd -x -D cn=Manager,dc=sphen,dc=local -W -f myuser.ldif

Managing users will be explained later. However, using files like this could become difficult with many users; we can install a web interface to manage users and groups (optional). If you do not wish to install phpldapadmin, then go to the next part, SSL. You can also create your own CLI for LDAP, using for example whiptail/dialog.
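As a first step toward such a CLI, the user file can be generated from a few parameters. This is only a sketch: make_user_ldif is a hypothetical helper, the user name and id in the example call are made up, and the password hash is a placeholder to be replaced by real slappasswd output.

```shell
# Hypothetical helper: print the LDIF for a user and its own group.
# Usage: make_user_ldif USERNAME ID PASSWORD_HASH
make_user_ldif() {
    user=$1; id=$2; hash=$3
    # Ids of 1000 and below are reserved for system users.
    if [ "$id" -le 1000 ]; then
        echo "id must be greater than 1000" >&2
        return 1
    fi
    cat <<EOF
dn: uid=$user,ou=People,dc=sphen,dc=local
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
cn: $user
sn: Linux
userPassword: $hash
loginShell: /bin/bash
uidNumber: $id
gidNumber: $id
homeDirectory: /home/$user

dn: cn=$user,ou=Group,dc=sphen,dc=local
objectClass: posixGroup
cn: $user
gidNumber: $id
memberUid: $user
EOF
}

# Example call with made-up values; the hash is a placeholder.
make_user_ldif myuser2 1102 '{SSHA}REPLACE_WITH_SLAPPASSWD_OUTPUT'
```

Redirect the output to a file and load it with the same ldapadd command as for myuser.ldif.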

phpldapadmin (optional)

Install now phpldapadmin, which will be our web interface to manage users (we downloaded rpm before, in preparation page).

yum install phpldapadmin

Then, edit /etc/phpldapadmin/config.php file by applying the following patch (we switch from uid to dn). Remember the installation preparation page, to apply a patch, create a patch.txt file, fill it with the patch, and then apply it on desired file using: patch /etc/phpldapadmin/config.php < patch.txt.

--- config.php 2016-06-13 09:49:53.877068683 -0400
+++ /etc/phpldapadmin/config.php 2016-06-13 09:50:03.514000554 -0400
@@ -394,8 +394,8 @@
    Leave blank or specify 'dn' to use full DN for logging in. Note also that if
    your LDAP server requires you to login to perform searches, you can enter the
    DN to use when searching in 'bind_id' and 'bind_pass' above. */
-// $servers->setValue('login','attr','dn');
-$servers->setValue('login','attr','uid');
+$servers->setValue('login','attr','dn');
+//$servers->setValue('login','attr','uid');

 /* Base DNs to used for logins. If this value is not set, then the LDAP server
    Base DNs are used. */

And also edit /etc/httpd/conf.d/phpldapadmin.conf with the following patch, where we define which nodes can query the LDAP server, here all nodes on 10.0.0.0/16.

--- phpldapadmin.conf 2016-06-13 09:53:31.580529914 -0400
+++ /etc/httpd/conf.d/phpldapadmin.conf 2016-06-13 09:53:47.934414342 -0400
@@ -14,7 +14,7 @@
     # Apache 2.2
     Order Deny,Allow
     Deny from all
-    Allow from 127.0.0.1
+    Allow from 127.0.0.1 10.0.0.0/16
     Allow from ::1
   </IfModule>
 </Directory>

Before restarting all servers, we need to adjust an SELinux parameter, to allow httpd to access slapd. Check SELinux ldap related booleans:

# getsebool -a | grep ldap
authlogin_nsswitch_use_ldap --> off
httpd_can_connect_ldap --> off

We need httpd_can_connect_ldap to be on.

setsebool httpd_can_connect_ldap 1

Now check again:

# getsebool -a | grep ldap
authlogin_nsswitch_use_ldap --> off
httpd_can_connect_ldap --> on

Now, restart httpd and slapd, and you should be able to connect to http://localhost/phpldapadmin.

User: cn=Manager,dc=sphen,dc=local

Password: The one you used previously when using slappasswd

SSL

Let's finish the LDAP installation by enabling SSL for exchanges between the clients and the main LDAP server. We first need to generate a certificate:

cd /etc/pki/tls/certs
make node.key
openssl rsa -in node.key -out node.key
make node.csr
openssl x509 -in node.csr -out node.crt -req -signkey node.key -days 3650
mkdir /etc/openldap/certs/
cp /etc/pki/tls/certs/node.key /etc/pki/tls/certs/node.crt /etc/pki/tls/certs/ca-bundle.crt /etc/openldap/certs/
chown ldap. /etc/openldap/certs/node.key /etc/openldap/certs/node.crt /etc/openldap/certs/ca-bundle.crt

Now create a file called mod_ssl.ldif:

dn: cn=config
changetype: modify
add: olcTLSCACertificateFile
olcTLSCACertificateFile: /etc/openldap/certs/ca-bundle.crt
-
replace: olcTLSCertificateFile
olcTLSCertificateFile: /etc/openldap/certs/node.crt
-
replace: olcTLSCertificateKeyFile
olcTLSCertificateKeyFile: /etc/openldap/certs/node.key

Then apply it to the LDAP configuration and restart the server:

ldapmodify -Y EXTERNAL -H ldapi:/// -f mod_ssl.ldif
systemctl restart slapd
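
If slapd refuses to start after this change, a mismatched key and certificate is one possible cause. The following sketch compares the RSA modulus of both files. The function name key_matches_cert is hypothetical, and the paths are the ones used in this section.

```shell
#!/bin/bash
# Hypothetical helper: return 0 when the RSA private key ($1) and the
# certificate ($2) carry the same modulus, i.e. belong together.
key_matches_cert() {
    local key_mod cert_mod
    key_mod=$(openssl rsa -noout -modulus -in "$1" 2>/dev/null) || return 1
    cert_mod=$(openssl x509 -noout -modulus -in "$2" 2>/dev/null) || return 1
    [ -n "$key_mod" ] && [ "$key_mod" = "$cert_mod" ]
}

key=/etc/openldap/certs/node.key
crt=/etc/openldap/certs/node.crt
if [ -r "$key" ] && [ -r "$crt" ]; then
    if key_matches_cert "$key" "$crt"; then
        echo "key and certificate match"
    else
        echo "key and certificate do NOT match"
    fi
    # Show who the certificate was issued to and when it expires.
    openssl x509 -noout -subject -enddate -in "$crt"
fi
```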

That is all for the LDAP server.

Munge and Slurm

Munge is used by slurm to authenticate between nodes. The munge key needs to be the same on all servers and nodes, and their clocks must be synchronized (using ntp). First install munge.

Munge

Install munge from your own repository or from my repository:

yum install munge munge-libs

Then generate a munge key. According to munge web site, a good method is to grab random numbers:

dd if=/dev/urandom bs=1 count=1024 > /etc/munge/munge.key

Now configure munge: give the key to the munge user and restrict its permissions, create the needed directories, then start and enable the service:

chown munge:munge /etc/munge/munge.key
chmod 0400 /etc/munge/munge.key
mkdir /var/run/munge
chown -R munge:munge /var/run/munge
chmod -R 0755 /var/run/munge
systemctl start munge
systemctl enable munge

Note that it is possible to test munge on the node using munge -n.
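
A quick sanity check of the key file and of the running service could look like the sketch below. The function name check_munge_key is hypothetical. It assumes the 1024-byte key and 0400 permissions used in this section, and GNU stat as shipped with CentOS.

```shell
#!/bin/bash
# Hypothetical helper: return 0 when the key file is exactly 1024 bytes
# and has mode 0400 (readable by its owner only).
check_munge_key() {
    local info
    info=$(stat -c '%a %s' "$1" 2>/dev/null) || return 1
    [ "$info" = "400 1024" ]
}

key=/etc/munge/munge.key
if [ -e "$key" ]; then
    if check_munge_key "$key"; then
        echo "munge key looks fine"
    else
        echo "munge key has an unexpected size or mode"
    fi
fi

# Encode a credential and decode it right away on the same node.
if command -v munge >/dev/null 2>&1 && command -v unmunge >/dev/null 2>&1; then
    munge -n | unmunge || echo "munge round trip failed"
fi
```

Once other nodes are deployed, piping the credential through ssh to unmunge on a remote node (munge -n | ssh somenode unmunge, where somenode is a placeholder) checks that both sides share the same key.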

Slurm server

Time to set up slurm, the job scheduler. Slurm handles the dispatching of all jobs on the compute nodes and ensures maximum usage of compute resources. It is an essential component of the cluster.

The slurm master server running on batman is slurmctld. The server running on compute nodes is slurmd. No server runs on login nodes: slurm is simply installed on them, and the munge key is propagated so that they can submit jobs. All nodes share the same configuration file. Note that in this tutorial the slurm configuration is basic. You can do much more (for example, managing how many hours each user can consume).

Add slurm user:

groupadd -g 777 slurm
useradd -m -c "Slurm workload manager" -d /etc/slurm -u 777 -g slurm -s /bin/bash slurm

Install slurm:

yum install slurm slurm-munge

Now create the slurm configuration file /etc/slurm/slurm.conf, and copy/paste the following (there is also an example file in /etc/slurm):

ClusterName=sphencluster
ControlMachine=batman
SlurmUser=slurm
SlurmctldPort=6817
SlurmdPort=6818
AuthType=auth/munge
CryptoType=crypto/munge
StateSaveLocation=/etc/slurm/SLURM
SlurmdSpoolDir=/var/log/slurm/spool_slurmd
SlurmctldPidFile=/var/run/slurmctld.pid
SlurmdPidFile=/var/run/slurmd.pid
CacheGroups=0
ReturnToService=0
SlurmctldTimeout=350
SlurmdTimeout=350
InactiveLimit=0
MinJobAge=350
KillWait=40
Waittime=0
MpiDefault=pmi2
SchedulerType=sched/backfill
SelectType=select/cons_res
SelectTypeParameters=CR_Core_Memory,CR_CORE_DEFAULT_DIST_BLOCK
FastSchedule=1
SlurmctldDebug=5
SlurmctldLogFile=/var/log/slurm/slurmctld.log
SlurmdDebug=5
SlurmdLogFile=/var/log/slurm/slurmd.log.%h
JobCompType=jobcomp/none
NodeName=mycompute[1-30] Procs=12 State=UNKNOWN
PartitionName=basiccompute Nodes=mycompute[1-28] Default=YES MaxTime=INFINITE State=UP
PartitionName=gpucompute Nodes=mycompute[29-30] Default=NO MaxTime=INFINITE State=UP

Tune the file according to your needs (compute resources and partitions, cluster name, etc). In this example, mycompute1 to mycompute30 each have 12 cores available for calculations, and mycompute29 and mycompute30 have a GPU and sit in their own partition. You cannot declare more cores than are physically available on a node. If you do, the node will go into drain mode automatically (explained in the managing the cluster part).
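
To catch a Procs value that is too large before a node drains, the declared value can be compared with what a compute node reports. The sketch below is meant to be run on a compute node. The function name conf_procs is hypothetical, and nproc counts logical CPUs, so with hyper-threading enabled it may report more than the number of physical cores.

```shell
#!/bin/bash
# Hypothetical helper: print the Procs= value of every NodeName line
# found in the slurm.conf file given as $1.
conf_procs() {
    sed -n 's/^NodeName=.*[[:space:]]Procs=\([0-9][0-9]*\).*/\1/p' "$1"
}

conf=/etc/slurm/slurm.conf
if [ -r "$conf" ]; then
    declared=$(conf_procs "$conf" | head -n 1)
    actual=$(nproc)
    if [ -n "$declared" ] && [ "$declared" -gt "$actual" ]; then
        echo "warning: slurm.conf declares $declared procs, this node reports $actual"
    else
        echo "declared procs: ${declared:-none}, reported by this node: $actual"
    fi
fi
```

On a node where slurm is installed, slurmd -C prints the hardware configuration as slurm itself detects it, which is a useful second opinion.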

Then create the required directories and set their ownership:

mkdir /var/spool/slurmd
chown -R slurm:slurm /var/spool/slurmd
mkdir /etc/slurm/SLURM
chown -R slurm:slurm /etc/slurm/SLURM
chmod -R 0755 /var/spool/slurmd
mkdir /var/log/slurm/
chown -R slurm:slurm /var/log/slurm/

Then start the slurmctld server:

systemctl start slurmctld
systemctl enable slurmctld

Again, do not start slurmd on batman as it is only for compute nodes.

If the server fails to start, you can launch it manually in the foreground (-D) with increased verbosity (-vvvvvv) to see what goes wrong:

slurmctld -D -vvvvvv

That’s all for the bare minimum. We can now proceed with deploying the nfs (io) node that will host the /home and /hpc-softwares data spaces, then deploy login nodes and finally deploy compute nodes. After that, it will be time to manage the cluster and start compiling the basic HPC libraries.