Linux HPC Cluster

This tutorial explains how to install a basic HPC cluster. The first part is dedicated to the core installation, i.e. the bare minimum. Additional features are then proposed.

This tutorial focuses on simplicity, lightness and security. All the software used is very common, and when you face an error, a quick search on the web will solve the issue most of the time.

A few words on the names used:

- A server refers to a physical machine when speaking about hardware, or to a daemon listening on the network when speaking about software. (Example: a Super Micro Twin2 server is a physical server whose vendor is Super Micro. A DHCP server is a software daemon running on a management node and listening on the network, waiting for requests from DHCP clients.) To simplify this tutorial, a node will refer to a physical server, and a server to a software daemon listening on the network.

- A node refers to a physical server.

- A system refers to the operating system on a node.

- A service refers to a software daemon running on a node and managed using the operating system service manager (here systemd). Most software servers are run as services, which simplifies their usage a lot.

- Depending on your system, network interface (NIC) names can differ. In this tutorial, we will assume NIC names are enp0sx, but you may face different names, like ethx or emx.

- Last but not least, the system administrator, or sysadmin, will be you, the person in charge of managing the cluster.

What is a “classic” HPC cluster dedicated to? Its purpose is to provide compute resources to users. Users log in on dedicated nodes (called login nodes), upload their code and data, compile their code, and launch jobs (calculations) on the cluster.

What is a “classic” HPC cluster made of? Most of the time, a cluster is composed of:

- An administration node (pet), whose purpose is to host all core servers of the cluster. Multiple servers are hosted on this administration node, such as:

  - The NTP server, whose purpose is to provide time and date to all nodes and keep everyone synchronized. Having the same time on every node is crucial, as authentication relies on it.

  - The DHCP server, whose purpose is to give every non-management node an IP on the network, depending on its MAC address.

  - The DNS server, whose purpose is to map node names (hostnames) to IPs.

  - The job scheduler Slurm, whose purpose is to manage compute resources and optimize the allocation of these resources across all jobs.

  - The repository, whose purpose is to provide RPM packages (system software) to all systems.

  - The PXE stack, whose purpose is to deploy a basic OS on other nodes.

  - The LDAP server, whose purpose is to provide user authentication to all systems and keep users synchronized across the entire cluster.

  - Nagios (optional), whose purpose is to monitor the health of other nodes and keep the system administrator informed of incidents.

- IO nodes (pet), whose purpose is to provide storage space to users. In this tutorial, basic storage is based on NFS, and advanced storage (optional) on parallel file systems.

- Login nodes (cattle), whose purpose is to be the place where users work on the cluster, interact with the job scheduler and manage their data.

- Compute nodes (cattle), whose purpose is to provide calculation resources.

(Original black and white image from Roger Rössing, otothek_df_roe-neg_0006125_016_Sch%C3%A4fer_vor_seiner_Schafherde_auf_einer_Wiese_im_Harz.jpg)

An HPC cluster can be seen as a flock of sheep: the sysadmin (shepherd), the management/io nodes (shepherd dog), and the compute/login nodes (sheep). This leads to two types of nodes, as in cloud computing: pets (shepherd dog) and cattle (sheep). While the safety of your pets must be absolute for good production, losing cattle is common and considered normal.

In HPC, most of the time, the management node, file system (io) nodes, etc. are considered pets. On the other hand, compute nodes and login nodes are considered cattle.

The same philosophy applies to file systems: some must be safe, others can be faster but “losable”, and users have to understand this and take precautions. In this tutorial, /home will be considered safe, and /scratch fast but losable.

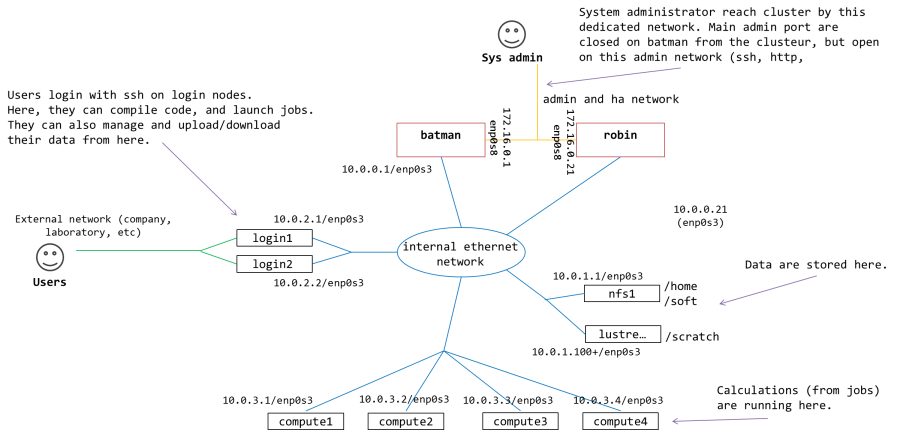

The cluster structure will be as follows:

In this configuration, there will be one master node (and optionally a second one for HA). One NFS node will be deployed, for /home and /hpc-softwares (and optionally another io node for a fast file system, /scratch, using a parallel file system). The Slurm job scheduler will be used, and an LDAP server with a web interface will also be installed for user management. Login nodes and compute nodes will then be deployed on the fly with PXE. The cluster will be monitored (optional) using Nagios.

All services can be split into dedicated VMs, for easy backup, testing and updates.

Network information (IPs will be the same for both types of cluster):

- batman : 10.0.0.1 (on first network interface, enp0s3)

- robin : 10.0.0.21 (enp0s3)

- Storage will use 10.0.1.x range

- Login will use 10.0.2.x range

- Compute will use the 10.0.3.x range and above

Netmask will be 255.255.0.0 (/16)

Domain name will be sphen.local

Note: if you plan to test this tutorial in VirtualBox, the 10.0.X.X range may already be taken by the VirtualBox NAT. In this case, use 10.1.X.X for this tutorial.
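The address plan above can be checked with a few lines of Python. This is only a sketch using the standard `ipaddress` module: the function name `role_of` and the role labels are made up for illustration, and treating 10.0.0.x as the management range is an assumption based on the addresses of batman and robin. If you use 10.1.X.X because of VirtualBox, adjust `CLUSTER_NET` accordingly.

```python
import ipaddress

# Cluster network from the plan above (10.0.0.0 with netmask 255.255.0.0).
CLUSTER_NET = ipaddress.ip_network("10.0.0.0/16")

# Third octet -> role. The 10.0.0.x "management" entry is an assumption
# derived from batman (10.0.0.1) and robin (10.0.0.21).
ROLE_BY_THIRD_OCTET = {0: "management", 1: "storage", 2: "login"}


def role_of(ip: str) -> str:
    """Return the role implied by an address of the cluster network."""
    addr = ipaddress.ip_address(ip)
    if addr not in CLUSTER_NET:
        raise ValueError(f"{ip} is outside {CLUSTER_NET}")
    third_octet = addr.packed[2]
    # Everything from 10.0.3.x upwards is reserved for compute nodes.
    return ROLE_BY_THIRD_OCTET.get(third_octet, "compute")


if __name__ == "__main__":
    for ip in ("10.0.0.1", "10.0.0.21", "10.0.1.1", "10.0.2.1", "10.0.3.1"):
        print(ip, role_of(ip))
```

Such a check can be handy before writing the DHCP and DNS configuration, to catch an address that landed in the wrong range.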

The administration (and optional HA) network will use 172.16.0.1 for batman (on enp0s8) and 172.16.0.21 for the optional robin (on enp0s8). The netmask will be /24 on this network. We will connect through this network to manage the cluster, and once security is enforced, connections to management nodes will only be possible through this network.

The InfiniBand network (optional) will use the same IP pattern as the Ethernet network, but in the 10.100.x.x/16 range.
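As an illustration of this pattern, the InfiniBand address of a node can be derived from its Ethernet address. The sketch below assumes that "same pattern" means keeping the last two octets and only swapping the 10.0 prefix for 10.100; the function name `ib_address` is hypothetical.

```python
import ipaddress

ETH_NET = ipaddress.ip_network("10.0.0.0/16")    # Ethernet network of the cluster
IB_NET = ipaddress.ip_network("10.100.0.0/16")   # optional InfiniBand network


def ib_address(eth_ip: str) -> str:
    """Map an Ethernet address to the matching InfiniBand address.

    Assumption: both networks share the same host part (last two octets).
    """
    addr = ipaddress.ip_address(eth_ip)
    if addr not in ETH_NET:
        raise ValueError(f"{eth_ip} is outside {ETH_NET}")
    # Offset of the host inside the Ethernet network, reused in the IB network.
    host_part = int(addr) - int(ETH_NET.network_address)
    return str(IB_NET.network_address + host_part)


if __name__ == "__main__":
    print(ib_address("10.0.0.1"))   # batman
    print(ib_address("10.0.3.5"))   # a compute node
```

With this convention, batman (10.0.0.1 on Ethernet) would be 10.100.0.1 on InfiniBand.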

All nodes will be installed with a minimal install of CentOS 7.2 (this tutorial also works fine with RHEL 7.2 and should also work with higher versions of RHEL/CentOS 7). A few tips are provided in the Prepare install page. I provide some RPMs for system administration (munge, slurm, nagios, etc.) and other RPMs for HPC software (gcc, fftw, etc.).

Final notes before we start:

- This tutorial assumes you cannot reach the Internet from the cluster. All tools will be downloaded and/or built first. Only Slurm is needed for the bare minimum part.

- In this first version, SELinux is set to enforcing, and iptables is used instead of firewalld but is turned off (it is turned on later, in an optional security enforcing part). When facing a permission denied error, try setting SELinux to permissive mode to check whether it is the cause, or check the SELinux logs.

- If you get “Pane is dead” during a PXE install, most of the time increasing RAM to at least 1024 MB will fix it.

- Login nodes will be vulnerable, as users can log in on them and they are exposed to an “untrusted” network. We will set up basic defenses on these nodes.

- You can edit files using vi, which is a powerful tool, but if you feel more comfortable with it, use nano (nano myfile.txt, then edit the file, then use Ctrl+O to save and Ctrl+X to exit).

Some parts are optional; feel free to skip them. It is also strongly recommended to first install the bare minimum cluster (management node with repository, DHCP, DNS, PXE, NTP, LDAP and Slurm, then the NFS node, then login and compute nodes), check that it works, and only then install the optional parts (Nagios, parallel file system, etc.).