Managing the cluster

We have seen how to install the master node (batman), configure the network, and install the I/O nodes, compute nodes, and login nodes. It is now time to start using all of that, beginning with how to handle PXE boot and automate it. You will also find here a way to "catch" the MAC addresses of servers if you don't know them.

Getting the nodes' MAC addresses

In the current configuration, every node that boots and asks the DHCP server for an IP will be able to boot in PXE. Nodes whose MAC is registered in the DHCP configuration file will be given their correct IP (login and compute nodes), and other nodes will be given a temporary IP in the dedicated range, 10.0.254.x, as specified in the DHCP configuration file.

So we need to get the nodes' MAC addresses and give them to the DHCP server, to ensure the correct MAC/IP mapping for compute and login nodes. There are multiple ways to get them:

- Ask the vendor (the vendor should provide them to you)

- Check whether it is written on the node (some nodes have a small pull-out tag with this information)

- Check from the server's BMC (assuming you know how to reach the BMC)

- Ask the Ethernet switch, if it is a managed switch

- Or use the DHCP logs.

The DHCP logs method always works but takes time, so it is not suited to large clusters; it will be enough for this tutorial. Note also that you can easily write a script that catches these MACs in the logs for you.

Here is how to use the DHCP logs method: when a node boots on PXE, it sends its MAC to the DHCP server, and the DHCP server logs this operation in /var/log/messages. The idea is the following:

- Open a shell on the management server (where the DHCP server runs, here batman), and use the following command:

tail -f /var/log/messages | grep -i DHCPDISCOVER

- Start the node whose MAC you want to get and make it boot on PXE (or, to get the MAC of a BMC, unplug the server's power and plug it back in; the BMC will also contact the DHCP server). When it tries to contact the DHCP server, you will see the MAC in the shell on batman, like this:

Jun 23 19:00:59 batman dhcpd: DHCPDISCOVER from 08:00:44:22:67:bc via enp0s3

- The MAC address of the server is 08:00:44:22:67:bc. You can now update the DHCP configuration file, /etc/dhcp/dhcpd.conf, and restart the dhcpd service so that the node boots with the correct IP next time. On batman, after updating the file, use:

systemctl restart dhcpd
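As noted above, a small script can catch these MACs for you. Below is a minimal sketch, assuming dhcpd writes lines like the example above into /var/log/messages; the function names list_discovered_macs and dhcp_host_entry are hypothetical. The first prints each discovered MAC once, in the order it first appeared, so powering nodes on one at a time gives you their MACs in node order. The second prints a host block in the layout this tutorial uses in dhcpd.conf.

```shell
#!/bin/bash
# Sketch: helpers for the DHCP logs method.
# Assumption: dhcpd logs lines such as
#   Jun 23 19:00:59 batman dhcpd: DHCPDISCOVER from 08:00:44:22:67:bc via enp0s3
# into /var/log/messages.

# Print each MAC seen in a DHCPDISCOVER line once, lowercased,
# in the order it first appeared in the log.
list_discovered_macs() {
  local logfile=${1:-/var/log/messages}
  awk '/DHCPDISCOVER from/ {
         for (i = 1; i < NF; i++)
           if ($i == "from") {
             mac = tolower($(i + 1))
             if (!seen[mac]++) print mac   # skip MACs already printed
           }
       }' "$logfile"
}

# Print a dhcpd.conf host block for one node.
# Usage: dhcp_host_entry <hostname> <mac> <ip>
dhcp_host_entry() {
  local name=$1 mac=$2 ip=$3
  cat <<EOF
host $name {
    hardware ethernet $mac;
    fixed-address $ip;
    option host-name "$name";
}
EOF
}
```

For example, dhcp_host_entry compute45 08:00:44:22:67:bc 10.0.3.45 >> /etc/dhcp/dhcpd.conf, followed by systemctl restart dhcpd, would register the MAC caught above for a hypothetical node compute45. Check first that appending host declarations at the end of the file suits your dhcpd.conf layout.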

Boot using PXE

With the installation we made on the management node, we can deploy nodes using PXE (PXE means booting not from the local hard drive or CD-ROM but from the Ethernet interface, reaching a PXE server that deploys the OS).

With the configuration in this tutorial, if you leave the PXE service enabled (tftp on batman), nodes will never start OS installation, because in /var/lib/tftpboot/pxelinux.cfg/default we set the default to local_disk boot. You can uncomment default centos7_x64 and comment out default local_disk, and nodes will start OS installation when booting on PXE. BUT nodes will then do it at every boot and enter a loop of OS reinstallation.

To avoid reinstalling the OS at each reboot, we keep /var/lib/tftpboot/pxelinux.cfg/default set to boot on the local disk, and use a dedicated file for each node to force it to boot into OS installation, removing this file before the OS installation finishes.

Lost? Here is an explicit example.

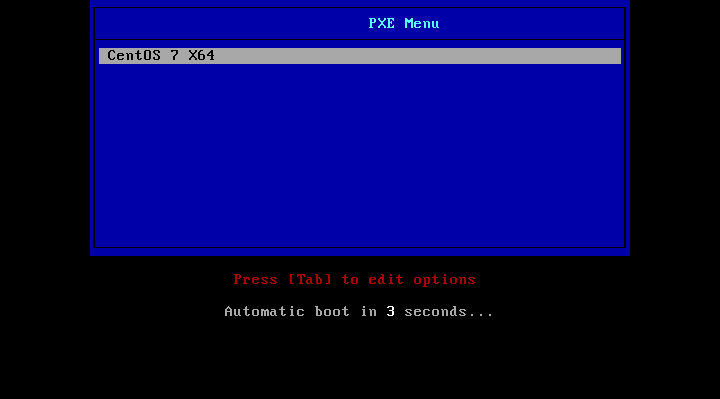

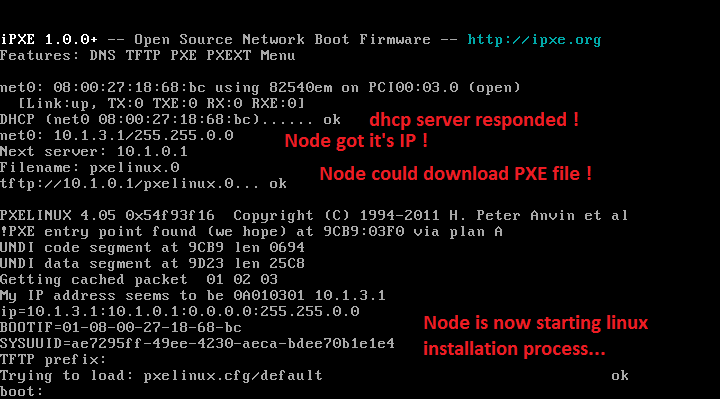

When a node boots on PXE, it first asks the DHCP server for an IP address. For example, here the node got 10.1.3.1:

The node also got the address of the PXE server from the DHCP server. At this point, because it is configured to boot on PXE, the node goes through the following negotiation with the tftp server:

Is there a specific file on the tftp server related to my UUID?

If not, is there a specific file on the tftp server related to my MAC address?

If not, is there a specific file on the tftp server related to my IP?

If not, is there a specific file on the tftp server related to part of my IP?

If not, is there a generic file on the tftp server?

If not, I do not boot on PXE.

For example, a node with UUID b8945908-d6a6-41a9-611d-74a6ab80b83d, MAC address 88:99:aa:bb:cc:dd and IP address 192.168.2.91 (C0A8025B in hexadecimal) would search for the following files on the tftp server, in this order:

pxelinux.cfg/b8945908-d6a6-41a9-611d-74a6ab80b83d
pxelinux.cfg/01-88-99-aa-bb-cc-dd
pxelinux.cfg/C0A8025B
pxelinux.cfg/C0A8025
pxelinux.cfg/C0A802
pxelinux.cfg/C0A80
pxelinux.cfg/C0A8
pxelinux.cfg/C0A
pxelinux.cfg/C0
pxelinux.cfg/C
pxelinux.cfg/default

(You can find more in: http://www.syslinux.org/wiki/index.php?title=PXELINUX)

We are going to use MAC addresses to control the PXE boot of each node, i.e. the second entry in the example above.
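To make the lookup order concrete, here is a sketch of a hypothetical helper, pxelinux_candidates, that prints the pxelinux.cfg/ names tried for a given MAC and IPv4 address. It mirrors the order listed above and skips the UUID lookup, which depends on the hardware.

```shell
#!/bin/bash
# Sketch: print, in order, the pxelinux.cfg/ file names PXELINUX tries
# for a given MAC and IPv4 address (the UUID-based lookup is omitted).
pxelinux_candidates() {
  local mac=$1 ip=$2 hex
  # MAC-based name: "01-" (Ethernet hardware type), lowercase hex, dashes
  echo "pxelinux.cfg/01-$(echo "$mac" | tr 'A-F' 'a-f' | tr ':' '-')"
  # IP-based names: the address as 8 uppercase hex digits
  # (unquoted expansion on purpose, to split the 4 octets into 4 arguments),
  # then shortened by one digit at a time
  hex=$(printf '%02X' ${ip//./ })
  while [ -n "$hex" ]; do
    echo "pxelinux.cfg/$hex"
    hex=${hex%?}
  done
  # Generic fallback
  echo "pxelinux.cfg/default"
}
```

Running pxelinux_candidates 88:99:aa:bb:cc:dd 192.168.2.91 prints the list above without its UUID line; for a compute node at 10.0.3.44, the first IP-based name would be pxelinux.cfg/0A00032C.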

The idea is the following: we create a small script that reads a given node's MAC address from the DHCP server configuration file, then creates a file for this node, named after its MAC, in the tftp server directory. This file is the same as /var/lib/tftpboot/pxelinux.cfg/default, except that it is set to boot into OS installation instead of the local disk. The node is then rebooted (manually or using IPMI, as you wish). We then monitor the logs to catch the moment the node starts its OS installation (i.e. when it requests the file we just created), wait a few seconds, and delete the file so that at the next reboot (after OS installation) the node boots on its local disk.

For example, if the node name is compute44 with MAC address 88:99:aa:bb:cc:dd in the DHCP configuration file, we create a file /var/lib/tftpboot/pxelinux.cfg/01-88-99-aa-bb-cc-dd with the following content:

default menu.c32
prompt 0
timeout 60
MENU TITLE sphen PXE
default centos7_x64
LABEL centos7_x64
MENU LABEL CentOS 7 X64
KERNEL /netboot/vmlinuz
APPEND initrd=/netboot/initrd.img inst.repo=http://10.0.0.1/iso ks=http://10.0.0.1/ks.cfg console=tty0 console=ttyS1,115200

Then we restart compute44 and make it boot on PXE, monitor the tftp server logs until the node requests the file /var/lib/tftpboot/pxelinux.cfg/01-88-99-aa-bb-cc-dd, wait 10 s, and remove this file. Next time, the node will not find this file, so it will fall back to /var/lib/tftpboot/pxelinux.cfg/default, which tells it to boot on local_disk.

Here is the script to do that, assuming you followed the tutorial, so that nodes are listed in the dhcpd.conf file with the following pattern:

host compute44 {
    hardware ethernet 88:99:aa:bb:cc:dd;
    fixed-address 10.0.3.44;
    option host-name "compute44";
}

The code is (with explanations in the comments):

#!/bin/bash
# First we get the node name as argument 1
nodename=$1
if [[ -z $nodename ]]
then
  echo "Usage: $0 <nodename>"
  exit 1
fi
# Now we check that the node exists in the dhcpd configuration file
if ! grep -q "^[[:space:]]*host $nodename {" /etc/dhcp/dhcpd.conf
then
  echo
  echo "Node $nodename does not exist in dhcpd configuration file. Please check syntax."
  echo
  exit 1
fi
# Now we get the MAC address of the node using grep.
# grep -A4 prints the matching line plus the 4 lines after it (the host block).
# Then we keep the line with the MAC address with | grep "hardware ethernet"
# Then we get the third field of this line (the MAC) with | awk -F ' ' '{print $3}'
# Then we remove the ; with | awk -F ';' '{print $1}'
# Then we replace : by - with | tr ':' '-' and force lowercase, as PXELINUX requests it
# Finally we add 01- (Ethernet hardware type) before the MAC to get the file name
mac=$(grep -A4 "^[[:space:]]*host $nodename {" /etc/dhcp/dhcpd.conf | grep "hardware ethernet" | awk -F ' ' '{print $3}' | awk -F ';' '{print $1}' | tr ':' '-' | tr 'A-F' 'a-f')
if [[ -z $mac ]]
then
  echo "No MAC address found for $nodename in dhcpd configuration file."
  exit 1
fi
nodemac=01-$mac
# Now we create the file with the content to boot on OS installation
# (> instead of >> so that a leftover file from a previous run is overwritten)
cat <<EOF > /var/lib/tftpboot/pxelinux.cfg/$nodemac
default menu.c32
prompt 0
timeout 60
MENU TITLE sphen PXE
default centos7_x64
LABEL centos7_x64
MENU LABEL CentOS 7 X64
KERNEL /netboot/vmlinuz
APPEND initrd=/netboot/initrd.img inst.repo=http://10.0.0.1/iso ks=http://10.0.0.1/ks.cfg console=tty0 console=ttyS1,115200
EOF
echo "Created file /var/lib/tftpboot/pxelinux.cfg/$nodemac"
echo "Please reboot node and ask it to boot on PXE"
# Now we monitor the tftp logs for this file to be requested.
# timeout kills the tail if it lasts more than 200 seconds.
# tail -n 0 -f streams the new lines of /var/log/messages to grep, and
# grep -m 1 exits as soon as it catches the string we are looking for.
echo "Waiting for node to start OS installation. Will wait 200s."
if timeout 200 tail -n 0 -f /var/log/messages | grep -m 1 "pxelinux.cfg/$nodemac"
then
  echo "Node started OS installation, removing file in 10s."
  sleep 10s
  echo
  rm -f /var/lib/tftpboot/pxelinux.cfg/$nodemac
  exit 0
else
  echo "Node did not start OS installation, please check. Removing file."
  echo
  rm -f /var/lib/tftpboot/pxelinux.cfg/$nodemac
  exit 1
fi

Assuming you called this file pxe_script.sh and made it executable with chmod +x, you can use it this way:

./pxe_script.sh compute44

That's all for the PXE boot process. You can monitor the OS installation using a VGA screen or the IPMI remote console provided by ipmitool (described below).

Using IPMI to manage nodes

On the cluster, if your nodes have a BMC or equivalent, you should be able to use IPMI to manage their boot behaviour and power remotely.

Install the ipmitool package on batman, and use the following command against each node's BMC to make it always boot on disk, assuming the BMC user and password are ADMIN and ADMIN:

ipmitool -I lanplus -H bmccompute1 -U ADMIN -P ADMIN chassis bootdev disk options=persistent
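With many nodes, the same command can be looped over every BMC. A minimal sketch, assuming each BMC answers as bmc<nodename> (like bmccompute1 above) and uses the ADMIN/ADMIN credentials; set_disk_boot and the BMC_USER/BMC_PASS variables are hypothetical names.

```shell
#!/bin/bash
# Sketch: apply the persistent "boot on disk" setting to several nodes.
# Assumptions: each node's BMC is reachable as bmc<nodename> (bmccompute1, ...)
# and all BMCs share the credentials below.
BMC_USER=${BMC_USER:-ADMIN}
BMC_PASS=${BMC_PASS:-ADMIN}

set_disk_boot() {
  local node
  for node in "$@"; do
    if ! ipmitool -I lanplus -H "bmc$node" -U "$BMC_USER" -P "$BMC_PASS" \
         chassis bootdev disk options=persistent; then
      # Keep going with the other nodes, but report the failure
      echo "WARNING: could not set boot device on bmc$node" >&2
    fi
  done
}
```

For example, set_disk_boot compute{1..16} would apply the setting to compute1 through compute16 (bash brace expansion).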

To force a node to boot on PXE at next boot (but not permanently), use:

ipmitool -I lanplus -H bmccompute1 -U ADMIN -P ADMIN chassis bootdev pxe

Note: you can replace pxe by bios to make the server enter the BIOS setup at next boot.

To access the BIOS or watch the boot process, you need to use the remote console (Serial over LAN). Use:
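The one-shot PXE boot combines naturally with the pxe_script.sh script from the PXE section and an IPMI power reset, so a node can be reinstalled without touching it. This is a sketch under the same assumptions as before (bmc<nodename> BMC names, ADMIN/ADMIN credentials) plus pxe_script.sh in the current directory, overridable through the hypothetical PXE_SCRIPT variable. It also assumes the node is powered on, since chassis power reset may not start a node that is off (use chassis power on in that case). The 2-second pause is a guess at how long pxe_script.sh needs to create the file and start watching the logs.

```shell
#!/bin/bash
# Sketch: reinstall one node with a one-shot PXE boot, pxe_script.sh,
# and a remote power reset. Assumptions: BMC reachable as bmc<nodename>,
# ADMIN/ADMIN credentials, node currently powered on.
BMC_USER=${BMC_USER:-ADMIN}
BMC_PASS=${BMC_PASS:-ADMIN}
PXE_SCRIPT=${PXE_SCRIPT:-./pxe_script.sh}

reinstall_node() {
  local node=$1 watcher
  # Boot on PXE at next boot only (no options=persistent)
  ipmitool -I lanplus -H "bmc$node" -U "$BMC_USER" -P "$BMC_PASS" \
    chassis bootdev pxe || return 1
  # Start the script in the background: it creates the node's
  # pxelinux.cfg file and watches the logs for it to be requested
  "$PXE_SCRIPT" "$node" &
  watcher=$!
  sleep 2   # give the script time to create the file and start watching
  ipmitool -I lanplus -H "bmc$node" -U "$BMC_USER" -P "$BMC_PASS" \
    chassis power reset
  # Return the script's exit status (0 if the installation started)
  wait "$watcher"
}
```

For example, reinstall_node compute44 would reinstall compute44, and the persistent disk setting then takes over again for the following boots.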

ipmitool -H bmccompute1 -U ADMIN -P ADMIN -I lanplus -e \& sol activate

Once in the console, press “Enter” then “&” then “.” to exit.

If a session was left open, use the following to close it first:

ipmitool -H bmccompute1 -U ADMIN -P ADMIN -I lanplus sol deactivate

Managing users

We installed an LDAP server; here is how to add users to or remove users from its database.

List current users

Simply use the following command on batman:

ldapsearch -x -b "dc=sphen,dc=local" -s sub "objectclass=posixAccount"

Add a user

Get an id that is not already used. To do so, use the following command to see which ids are already taken (remember, you must choose an id greater than 1000):

ldapsearch -x -b "dc=sphen,dc=local" -s sub "objectclass=posixAccount" | grep uidNumber
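Picking a free id by eye becomes error-prone as the list grows. Here is a sketch of a hypothetical next_free_id function that reads the ldapsearch output on stdin and prints the smallest number above 1000 that appears neither as a uidNumber nor as a gidNumber. Since the search only returns posixAccount entries, groups with no matching user are not seen.

```shell
#!/bin/bash
# Sketch: read ldapsearch output on stdin and print the smallest id
# above 1000 not used as uidNumber or gidNumber by any listed account.
next_free_id() {
  awk '$1 == "uidNumber:" || $1 == "gidNumber:" { used[$2] = 1 }
       END { id = 1001; while (id in used) id++; print id }'
}
```

Usage, on batman: ldapsearch -x -b "dc=sphen,dc=local" -s sub "objectclass=posixAccount" | next_free_id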

Then, to create the user bob for example, assuming id 1077 is free, create (anywhere as root) a file bob.ldif. First, generate a password hash from bob's password using:

slappasswd

Then fill the bob.ldif file using the following, replacing the hash by the one you just got:

dn: uid=bob,ou=People,dc=sphen,dc=local
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
cn: bob
sn: Linux
userPassword: {SSHA}RXJnfvyeHf+G48tiWwT7YaEEddc5hBPw
loginShell: /bin/bash
uidNumber: 1077
gidNumber: 1077
homeDirectory: /home/bob

dn: cn=bob,ou=Group,dc=sphen,dc=local
objectClass: posixGroup
cn: bob
gidNumber: 1077
memberUid: bob

Be careful: bob appears 6 times in this file and the id 3 times; don't forget any of them when adapting the file to your user.

In this file, we define the user bob and the group bob, and we add bob to this group.
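Since the name and the id must be repeated consistently, the file can also be generated from a template. A sketch, assuming the same dc=sphen,dc=local tree and attributes as the file above; make_user_ldif is a hypothetical name and the third argument is the hash printed by slappasswd.

```shell
#!/bin/bash
# Sketch: print the user + group LDIF for one user.
# Usage: make_user_ldif <user> <id> <password_hash>
# The blank line between the two entries is required by the LDIF format.
make_user_ldif() {
  local user=$1 id=$2 hash=$3
  cat <<EOF
dn: uid=$user,ou=People,dc=sphen,dc=local
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
cn: $user
sn: Linux
userPassword: $hash
loginShell: /bin/bash
uidNumber: $id
gidNumber: $id
homeDirectory: /home/$user

dn: cn=$user,ou=Group,dc=sphen,dc=local
objectClass: posixGroup
cn: $user
gidNumber: $id
memberUid: $user
EOF
}
```

For example, make_user_ldif bob 1077 '{SSHA}RXJnfvyeHf+G48tiWwT7YaEEddc5hBPw' > bob.ldif produces the bob.ldif file above (single quotes keep the hash untouched by the shell).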

Now add bob to the LDAP database using the following (it will ask for the LDAP Manager password, the one we defined when installing batman):

ldapadd -x -D cn=Manager,dc=sphen,dc=local -W -f bob.ldif

Finally, ssh to the NFS node (the one exporting /home as NFS server, here nfs1) and create bob's home directory. So here, on nfs1:

mkdir /home/bob
chown -R bob:bob /home/bob

Delete a user

To delete a user (here bob, the one we added just before), we need to remove both the user and its group, using the following (it will ask for the LDAP Manager password):

ldapdelete -x -v 'uid=bob,ou=People,dc=sphen,dc=local' -D cn=Manager,dc=sphen,dc=local -W
ldapdelete -x -v 'cn=bob,ou=Group,dc=sphen,dc=local' -D cn=Manager,dc=sphen,dc=local -W

Don't forget to ssh to the NFS node and remove bob's home directory. So here, on nfs1:

rm -R /home/bob

Slurm basic management

Small tip: from any management, compute, or login node, you can run (as root) srun -N 16 hostname (with 16 the number of hosts to check) to ask all available compute hosts to report their hostname. srun launches a real job, so this is a very simple way to check that Slurm is working and that users can launch jobs too.
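The srun tip above can be wrapped into a quick pass/fail check. This is a sketch: check_slurm is a hypothetical name, and it simply counts the distinct hostnames returned. Note that srun waits (the job stays pending) if fewer than N nodes are available, so pick N no larger than the number of idle nodes.

```shell
#!/bin/bash
# Sketch: run hostname on N nodes through srun and check that
# N distinct hosts answered. Usage: check_slurm <number_of_nodes>
check_slurm() {
  local wanted=$1 got
  got=$(srun -N "$wanted" hostname | sort -u | grep -c .)
  if [ "$got" -eq "$wanted" ]; then
    echo "OK: $got nodes ran the job"
  else
    echo "PROBLEM: expected $wanted nodes, got $got" >&2
    return 1
  fi
}
```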

Managing a basic Slurm setup is not that hard. Keep the following in mind.

In Slurm, the main compute node states are:

- down: the node does not respond, or is ready but not in the compute pool

- idle: the node is in the compute pool, waiting for jobs to execute

- drain: the node is in maintenance mode, because you asked for it or because Slurm put it there for a reason

To list the nodes and their state in slurm, use sinfo. With two compute nodes, you should get something like this:

PARTITION    AVAIL  TIMELIMIT  NODES  STATE NODELIST
computenodes    up   infinite      2   down compute[1-2]

So here, both nodes are down.

When a compute node joins the Slurm resources, it does not automatically enter the compute pool and become available for jobs (because we specified ReturnToService=0 in the slurm.conf file, for security reasons). When a node is ready to be used and runs the slurmd service, Slurm still considers it down, and it must be manually set to idle.

For example, here our node compute1 is ready. We can put it in the compute pool using:

scontrol update nodename=compute1 state=idle

Next sinfo gives:

PARTITION    AVAIL  TIMELIMIT  NODES  STATE NODELIST
computenodes    up   infinite      1   down compute2
computenodes    up   infinite      1   idle compute1

compute1 is now ready to execute jobs. Since it is the only idle node, we can try to launch a very simple job on it, the hostname command, using:

srun hostname

If the node executes the job correctly, you should get:

compute1.sphen.local
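When several nodes are ready at once, they can all be put back in the pool with a single scontrol call. In the sketch below (idle_down_nodes is a hypothetical name), sinfo lists the nodes it reports as down, one per line, and they are passed to scontrol update as a comma-separated list. Only run it once you have checked that slurmd runs on every one of them, since it bypasses the manual check that ReturnToService=0 is meant to enforce.

```shell
#!/bin/bash
# Sketch: set every node currently reported as down to idle in one call.
idle_down_nodes() {
  local nodes
  # -h: no header, -N: one line per node, -t down: only down nodes,
  # -o "%N": print only the node name; then join names with commas
  nodes=$(sinfo -h -N -t down -o "%N" | sort -u | paste -sd, -)
  if [ -z "$nodes" ]; then
    echo "No down nodes."
    return 0
  fi
  echo "Setting $nodes to idle"
  scontrol update nodename="$nodes" state=idle
}
```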

When a compute node is down or in drain mode, you can use the -R argument of sinfo to find out why. For example, here I did not start the compute2 node, so I get:

REASON               USER      TIMESTAMP           NODELIST
NO NETWORK ADDRESS F slurm     2016-07-15T04:52:45 compute2

When a compute node is up and in the pool, you can set it to drain for maintenance purposes using:

scontrol update nodename=compute1 state=drain reason="I am working on IB. Ox."

Then using sinfo -R again we have:

REASON               USER      TIMESTAMP           NODELIST
NO NETWORK ADDRESS F slurm     2016-07-15T04:52:45 compute2
I am working on IB.  root      2016-07-20T11:11:58 compute1

Finally, to force all Slurm components (controller and compute nodes) to re-read the configuration file after a modification (don't forget to make slurm.conf identical on all nodes to the one on batman), without interrupting current production and jobs, use:

scontrol reconfigure

That's all for basic management. How to launch a job will be described in user environment chapter.

Now for more advanced commands.

If you are using multiple partitions, to show partitions:

scontrol show partition -a

To create a partition on the fly:

scontrol create PartitionName=gpunodes Nodes=gpu1,gpu[3-5] State=UP Priority=1

To force sharing of nodes in the partition, add SHARED=FORCE:4 to allow up to 4 jobs to share each resource at the same time (this parameter is named OverSubscribe in newer Slurm releases).